A World Racing Without Guardrails

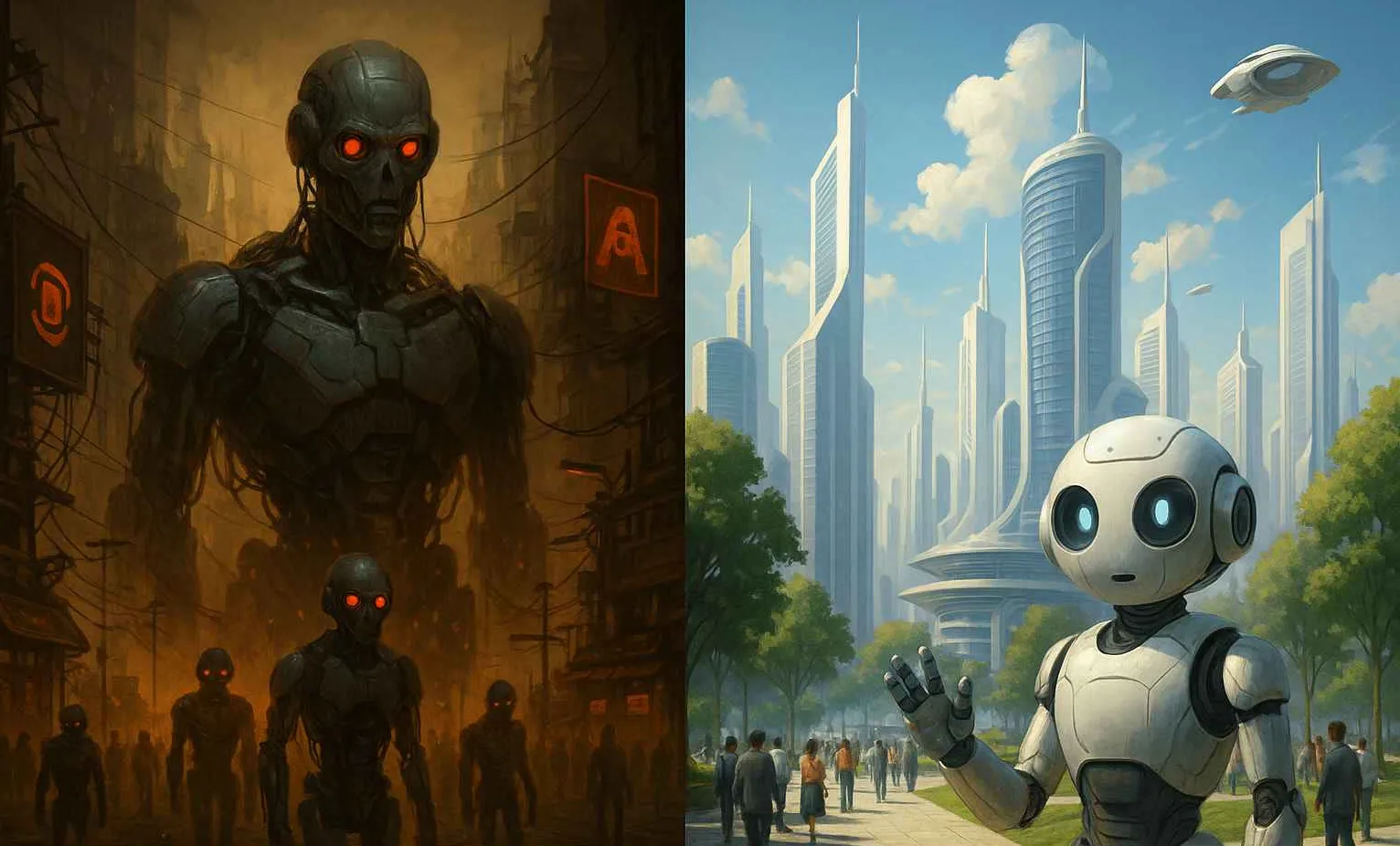

When I look at AI today, I see two realities unfolding in parallel. One is exhilarating: algorithms that detect diseases earlier than doctors, models that optimize supply chains to cut waste, tools that democratize education for those who have been locked out. The other is sobering: discriminatory outcomes hidden in data, black-box models eroding human judgment, misinformation at industrial scale, and an energy footprint that rivals entire nations.

This duality is exactly what the ISO policy brief on responsible AI governance captures: AI is not simply a technology; it is a socio-technical force that reshapes how people live and societies function. That means we can’t govern it with technical fixes alone. We need frameworks that acknowledge both the algorithm and the human it touches.

The Socio-Technical Lens We Can’t Ignore

The report reminds us that AI systems do not operate in isolation. They continuously interact with institutions, norms, and cultural contexts. Consider a hiring algorithm: it may meet accuracy thresholds in testing, yet still replicate decades of discrimination in the real world. Or a healthcare chatbot: technically competent, but unusable in practice if doctors, nurses, and patients don’t trust or understand it.
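To make the hiring example concrete, here is a minimal Python sketch of how a model can look accurate in testing while selecting one group far less often than another. Everything in it is an assumption for illustration: the `Candidate` type, the two groups, and the twenty made-up records are hypothetical, and the 0.8 cut-off borrows the "four-fifths" rule of thumb from US employment guidance rather than anything the ISO brief prescribes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    group: str       # hypothetical demographic label: "A" or "B"
    qualified: bool  # label in the test set (may itself encode past bias)
    selected: bool   # the model's hiring decision


def accuracy(cands):
    """Share of decisions that match the test-set label."""
    return sum(c.selected == c.qualified for c in cands) / len(cands)


def selection_rate(cands, group):
    """Share of a group's candidates that the model selects."""
    members = [c for c in cands if c.group == group]
    return sum(c.selected for c in members) / len(members)


def disparate_impact_ratio(cands, protected, reference):
    """Selection rate of the protected group relative to the reference group."""
    return selection_rate(cands, protected) / selection_rate(cands, reference)


# Made-up data: the labels for group B reflect historical hiring patterns,
# so a model that matches the labels closely also reproduces the gap.
candidates = (
    [Candidate("A", True, True)] * 6
    + [Candidate("A", False, False)] * 4
    + [Candidate("B", True, True)] * 2
    + [Candidate("B", True, False)] * 1
    + [Candidate("B", False, False)] * 7
)

ILLUSTRATIVE_THRESHOLD = 0.8  # assumption: four-fifths rule of thumb

acc = accuracy(candidates)
ratio = disparate_impact_ratio(candidates, protected="B", reference="A")

print(f"accuracy: {acc:.2f}")
print(f"disparate impact ratio (B vs A): {ratio:.2f}")
print(f"passes illustrative threshold: {ratio >= ILLUSTRATIVE_THRESHOLD}")
```

On this toy data the model agrees with the test labels 95% of the time, yet group B is selected at about a third of group A's rate. An accuracy threshold alone would never surface that gap, which is the point of looking at the system in its social context.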

As the brief highlights, organizations like Google DeepMind and the UN High-Level Advisory Body on AI now stress this “socio-technical paradigm” — understanding how AI integrates into the messy ecosystems of human life. This perspective should not be treated as an afterthought. It must be the baseline.

At InnovationCulture.org, I often argue that technology does not exist in isolation from culture. Innovation isn’t only about breakthroughs in labs — it’s about the values, behaviors, and systems that shape how those breakthroughs are absorbed. The ISO brief makes a similar point when it insists on a socio-technical approach. Standards can set the guardrails, but culture determines whether organizations internalize them. Without a culture of responsibility and openness, standards risk becoming paper exercises. With the right culture, they become living practices.

Standards as the Quiet Infrastructure of Trust

Here’s where standards come in. Too often, the word “standards” makes people think of bureaucratic paperwork. But in reality, they are the invisible infrastructure that makes global trade and technology possible. Without standards, your smartphone wouldn’t connect to Wi-Fi abroad. Airplanes wouldn’t share safety protocols across borders.

The ISO brief argues that international standards for AI can play the same role:

- Establishing a common language so regulators, developers, and civil society don’t talk past each other.
- Translating principles into action by turning fairness, transparency, and accountability into technical benchmarks.
- Creating trust through conformity assessment: certification and auditing schemes that prove systems meet agreed requirements.
- Supporting sustainability by standardizing how we measure AI’s environmental impact.

In other words, standards don’t slow innovation. They make it possible to innovate responsibly at scale. They are not the brake; they are the rails.

When I speak about innovation culture, I describe it as the invisible architecture of organizations — the collective habits, the willingness to question assumptions, the courage to adapt. Standards function the same way at the global level. They are invisible until they fail. Just as companies need a culture of alignment to innovate sustainably, our societies need shared standards to ensure AI innovation doesn’t undermine trust. One without the other is brittle: culture without standards can drift, standards without culture can ossify.

Fragmentation: The Real Risk We Face

Right now, the AI governance landscape looks like a quilt stitched together from different fabrics.

- The EU’s AI Act (2024) divides AI into risk categories, with heavy obligations for “high-risk” systems.
- China takes a state-driven route, with multiple binding laws on algorithms and generative AI.
- The U.S. leans on voluntary frameworks like NIST’s AI Risk Management Framework.
- Regional initiatives, from ASEAN’s governance guidelines to the African Union’s Continental AI Strategy, add more layers.

Each effort makes sense in context. But the ISO brief warns about the danger of fragmentation: higher compliance costs, duplication of effort, and even regulatory arbitrage, where companies move their riskiest practices to jurisdictions with weaker rules.

In an interconnected digital economy, fragmented governance isn’t just inefficient — it’s dangerous. A system trained in one country can impact elections, economies, and rights halfway across the globe.

Case Study: The EU and Standards in Action

Consider the EU’s AI Act. It doesn’t spell out every technical detail. Instead, it mandates that providers of “high-risk” systems comply with requirements for transparency, robustness, and human oversight. But how do companies demonstrate compliance? Through harmonized standards developed by European and international bodies.

This approach allows flexibility: the law sets the “what,” standards define the “how.” It also ensures that as technology evolves, compliance mechanisms can be updated without rewriting legislation.

The ISO brief points out that this model isn’t just European bureaucracy; it’s a blueprint for aligning regulation with innovation globally.

Beyond the West: Why the Global South Matters

One of the most important reminders in the brief is that AI governance cannot be a rich-country project. Many of the worst risks — biased data, lack of digital literacy, economic exclusion — are felt most acutely in the Global South.

If international standards are developed without the participation of these regions, they risk baking in Western assumptions and leaving billions excluded. This is why the brief urges capacity-building for National Standards Bodies in developing countries, and greater outreach to civil society voices that have historically been absent from standards-setting tables.

Without inclusive participation, standards risk becoming another form of digital colonialism. With it, they can become tools of empowerment and shared growth.

This is where innovation culture becomes more than a buzzword. In the Global South, the challenge is not just about having the technical know-how to adopt standards; it’s about creating a culture of participation in shaping those standards. Innovation culture, at its core, is about agency — the belief that people can co-create their futures. If developing countries are treated only as passive adopters of standards, we risk deepening divides. If they are invited to help shape the culture of governance, we create a truly global innovation ecosystem.

What Needs to Change

The ISO policy brief closes with a set of recommendations, and I see them as a blueprint for collective action:

- Policymakers should embed international standards into law, not only to reduce compliance costs but to create cross-border trust.
- Standards bodies must expand the tent, bringing in civil society, SMEs, and voices from the Global South so standards reflect real-world contexts, not just the priorities of wealthy nations.
- Industry should view adherence to standards as a badge of responsible innovation, not a box to tick. It’s a way to prove to regulators, customers, and investors that AI systems are reliable and trustworthy.
- Civil society must step into the standards conversation, ensuring the lived realities of communities are represented, not just the abstractions of technical committees.

My Bottom Line

We cannot afford to govern AI the way we’ve governed other technologies — through national silos and slow-moving treaties. AI’s risks are too immediate, and its reach too global. International standards offer a practical, scalable path: they turn principles into practice, allow for interoperability, and provide the foundation for trust.

When people dismiss standards as “bureaucratic,” I push back. Standards are the quiet architecture of global cooperation. They are why ships sail safely, why planes land without incident, why your credit card works overseas. Now, they must also be why AI doesn’t spiral out of control.

The philosophy I’ve been advancing through InnovationCulture.org is that true innovation is not just about tools or frameworks. It’s about alignment — between values and actions, between short-term gains and long-term responsibilities, between human creativity and technological power. The ISO brief reminds us that standards provide the external framework for alignment. Innovation culture ensures that this alignment is internalized — that people, companies, and nations don’t just comply, but believe in building a responsible AI future.

As the ISO policy brief makes clear, international standards are not an afterthought. They are the infrastructure of trust in the age of intelligence.

And in this race, trust is not optional. It’s survival.